Approaches to Visual Perception: David Marr's Theory (Part 2)

We are still discussing approaches to visual perception. Today we will talk about David Marr's theory of perception, which is more commonly known as the 2.5D sketch approach.

How is the brain able to take the information sensed by the eyes and turn it into an accurate internal representation of the surrounding world? Gibson's approach does not explain the possible mechanisms well. In this lecture, we address this gap by introducing Marr's information-processing approach, in which the analysis of the retinal image proceeds through four distinct stages: the gray-level description, the primal sketch, the 2.5D sketch, and the 3D object-centered description.

Before we move further, let me quote David Marr. Writing in 1982 about how Gibson had approached perception, he said that "the detection of physical invariants, like image surfaces, is exactly and precisely an information-processing problem, in modern terminology," and that Gibson "vastly underrated the sheer difficulty of such detection." Detecting physical invariants is just as difficult as Gibson feared, but nevertheless we can do it, and the only way to understand how is to treat it as an information-processing problem.

Marr's Theory of Perception

Marr is arguing here for a computational approach to perception: treating the problem of perception as a problem of information processing.

How do we take up information from the external world? How do we work on it? And how does that information lead to the end product, perception? These are the questions Marr is addressing.

Gibson identified the need for invariants in solving the problem of visual perception: you have seen the horizon ratio relation, the texture of surfaces, optic flow, and so on.

However, he does not specify the possible mechanisms by which this information is picked up from these invariants. As you may remember from the last lecture, he says we can get information from properties such as size: by looking at whether the elements of a surface become smaller or larger, you can make out whether the surface is receding or approaching. But how exactly this is done is not something Gibson really discusses.

Gray Level Description

To address this gap, a theory was needed that explains how these processes are carried out: how the brain takes the information sensed by the eyes and turns it into an accurate internal representation of the surrounding world. Such a theory was put forward by David Marr.

Before we look at Marr in more detail, let me point out a couple of commonalities between Gibson's and Marr's approaches. Like Gibson, Marr suggested that the information from the senses is sufficient to allow perception to occur. Marr adopted an information-processing approach in which the processes responsible for analyzing the retinal image are central: light from the external world falling on the retina is the primary source of information, it is the starting point of perception, and perception is built up from it. Marr's theory is therefore also strongly bottom-up (recall the distinction between top-down and bottom-up approaches from the last lecture), in that it treats the retinal image as the starting point of perception and explores how this image might be analyzed to produce a description of the environment. Note that Marr is not really concerned with perception for action; he is concerned with perception for recognition, that is, perception that builds a description of the world.

Marr saw the analysis of the retinal image as proceeding in four distinct stages, with each stage taking the output of the previous one and performing a new analysis on it. Perception is therefore an incremental process: something happens in the first stage, its output is processed further in the second stage, and so on.

Let us take a brief look at these stages before we elaborate on them. The first stage is the gray-level description, in which the intensity of light is measured at each point in the retinal image. The second stage is the generation of the primal sketch, which has two phases: first, in the raw primal sketch, areas that could potentially correspond to the edges and textures of objects are identified; then these areas are used to construct a full primal sketch, a description of the outline of any objects in view. We will elaborate on these in much more detail as we go ahead; for now, this is just an overview of what Marr was proposing.

The third stage is the 2.5D sketch, in which a description is formed of how the surfaces in view relate to each other and to the observer. The final stage is the 3D object-centered description, in which descriptions are produced that allow an object to be recognized from any angle: you can move around the object and still recognize it, so this stage forms a very stable, object-centered representation. Marr concerned himself mostly with the computational and algorithmic levels of analysis, and he did not worry much about the neural hardware that might actually carry out all of these computations.
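Because each stage consumes only the output of the one before it, the overall flow can be pictured as a chain of functions. The Python sketch below shows only that control flow. `run_stages` and the four stage functions are hypothetical placeholders that tag their input; they are not models of what Marr's stages actually compute.

```python
from typing import Any, Callable, List, Tuple

Stage = Tuple[str, Callable[[Any], Any]]

def run_stages(retinal_image: Any, stages: List[Stage]) -> List[Tuple[str, Any]]:
    """Run each stage on the previous stage's output, keeping a trace of every result."""
    trace = []
    current = retinal_image
    for name, stage in stages:
        current = stage(current)  # a stage sees only the output of the stage before it
        trace.append((name, current))
    return trace

# Hypothetical placeholder stages: each one just wraps its input so the order is visible.
MARR_STAGES: List[Stage] = [
    ("gray-level description", lambda x: ("gray", x)),
    ("primal sketch", lambda x: ("primal", x)),
    ("2.5D sketch", lambda x: ("2.5D", x)),
    ("3D object-centered description", lambda x: ("3D", x)),
]

trace = run_stages("retina", MARR_STAGES)
```

The final entry of `trace` wraps the original input four times, in stage order. This shows that nothing flows backward in this strictly bottom-up scheme.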

Let us now elaborate on these stages; you will need to follow them in some detail to understand the entire sequence of events. The first step is building up a gray-level description. Why gray level? Marr thought that color information was processed by a distinct module. He held that perception is handled by different modular components of processing: seeing shape is one module, seeing color is a different module, and depth and other properties are handled by yet other modules.

You might remember from the physiology part that different areas of the occipital lobe, such as V1, V2, V3 and V4, do different things; Marr is describing something similar. He was fascinated by the idea from computer science that a large process can be split into modules.

Any large computation, he argued, should be split up and implemented as a collection of small sub-parts that are as nearly independent of one another as the overall task allows. He was so taken with this idea that he elevated it to a principle, later referred to as the principle of modular design.
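The principle of modular design can be illustrated with a toy example. Several modules read the same input, but none reads another module's output, so any one of them can be removed or changed without affecting the rest. Everything here (`analyze_in_modules`, `mean_intensity`, `brightest_location`) is a hypothetical illustration, not a model of any actual visual area.

```python
from typing import Callable, Dict, List, Tuple

Image = List[List[float]]

def analyze_in_modules(image: Image, modules: Dict[str, Callable[[Image], object]]) -> Dict[str, object]:
    """Apply every module to the same input; no module sees another module's result."""
    return {name: module(image) for name, module in modules.items()}

# Hypothetical toy modules standing in for separate processing streams.
def mean_intensity(image: Image) -> float:
    values = [v for row in image for v in row]
    return sum(values) / len(values)

def brightest_location(image: Image) -> Tuple[int, int]:
    positions = [(y, x) for y, row in enumerate(image) for x, _ in enumerate(row)]
    return max(positions, key=lambda p: image[p[0]][p[1]])

image = [[0.0, 0.2], [0.9, 0.1]]
results = analyze_in_modules(image, {"brightness": mean_intensity, "peak": brightest_location})
```

Dropping the `"brightness"` module leaves the `"peak"` result unchanged, which is the near-independence the principle asks for.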

You may remember that in one of our earlier lectures we discussed this principle of modularity in great detail. The first stage in Marr's theory is to produce a description containing the intensity distribution of light at different points on the retina. How is this done? It is possible to derive the intensity of the light striking each part of the retina because, as light strikes a cell in the retina, the voltage across the cell membrane changes, and the size of this change corresponds to the intensity of the light. In the chapter on physiology we discussed neural impulses and how a membrane becomes depolarized or hyperpolarized. For the moment, all you need to understand is that the amount of change in a retinal neuron corresponds to the intensity of the light falling on it. Using this information, you can construct a description of any surface in terms of the intensity of the light it casts on the retina.
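Here is a minimal sketch of the gray-level description. It assumes, as a deliberate simplification, that each receptor's voltage change is linearly proportional to the light falling on it, plus an optional random fluctuation. Inverting that assumed relation gives one intensity value per retinal location. The function names and the `gain_mv` parameter are hypothetical. Real photoreceptor responses are not linear over their full range.

```python
import random
from typing import List

Grid = List[List[float]]

def receptor_responses(light: Grid, gain_mv: float, noise_mv: float = 0.0, seed: int = 0) -> Grid:
    """Hypothetical receptor model: voltage change (mV) = gain * light + random fluctuation.
    The linear relation is an assumption made for this sketch."""
    rng = random.Random(seed)
    return [[gain_mv * value + rng.uniform(-noise_mv, noise_mv) for value in row] for row in light]

def gray_level_description(responses: Grid, gain_mv: float) -> Grid:
    """Invert the assumed linear relation: one intensity value per retinal location."""
    return [[dv / gain_mv for dv in row] for row in responses]

light = [[0.1, 0.1, 0.8], [0.1, 0.8, 0.8]]  # made-up scene: a bright region on a dark one
description = gray_level_description(receptor_responses(light, gain_mv=20.0), gain_mv=20.0)
```

Without noise, `description` reproduces `light`. With `noise_mv` set, each value can be off by at most `noise_mv / gain_mv`. The description holds intensity only, with no color, in line with Marr's view that color is handled by a separate module.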

The Primal Sketch

A gray-level description is therefore produced by the pattern of voltage changes across the retina. It contains only the intensity information of the scene or surface you are looking at.

Now to the second stage. The primal sketch is generated in two parts. In the first part, a raw primal sketch is formed from the gray-level description by identifying patterns of changing intensity. In the first stage you had a description of the different levels of intensity across the surface or scene; now you take into account how this intensity changes across it. Changes in the intensity of reflected light can be grouped into three categories. Relatively large changes in intensity can be produced by the edge of an object. Smaller changes can be caused by the parts and texture of the object. Even smaller changes might simply be random fluctuations in the light. Marr and Hildreth, working together, proposed an algorithm for determining which intensity changes correspond to the edges of objects, so that changes due to random fluctuations can be discarded. This algorithm used a technique called Gaussian blurring, which involves averaging the intensity values in circular regions of the gray-level description.
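The three categories of intensity change can be illustrated with a naive classifier. It labels each change between neighboring samples in one row by its size. This is only an illustration of the categories: the thresholds are arbitrary assumptions, and Marr and Hildreth's algorithm does not work this way. Their algorithm compares images blurred to different degrees instead.

```python
from typing import List

def classify_intensity_changes(row: List[float], edge_min: float = 0.5, texture_min: float = 0.1) -> List[str]:
    """Label each change between neighboring samples by its size.
    The two thresholds are illustrative assumptions, not values from Marr's work."""
    labels = []
    for left, right in zip(row, row[1:]):
        size = abs(right - left)
        if size >= edge_min:
            labels.append("edge")     # large change: likely an object boundary
        elif size >= texture_min:
            labels.append("texture")  # smaller change: surface parts or texture
        else:
            labels.append("noise")    # tiny change: random fluctuation in the light
    return labels

labels = classify_intensity_changes([0.0, 0.01, 0.0, 0.2, 0.0, 0.9, 0.9])
```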

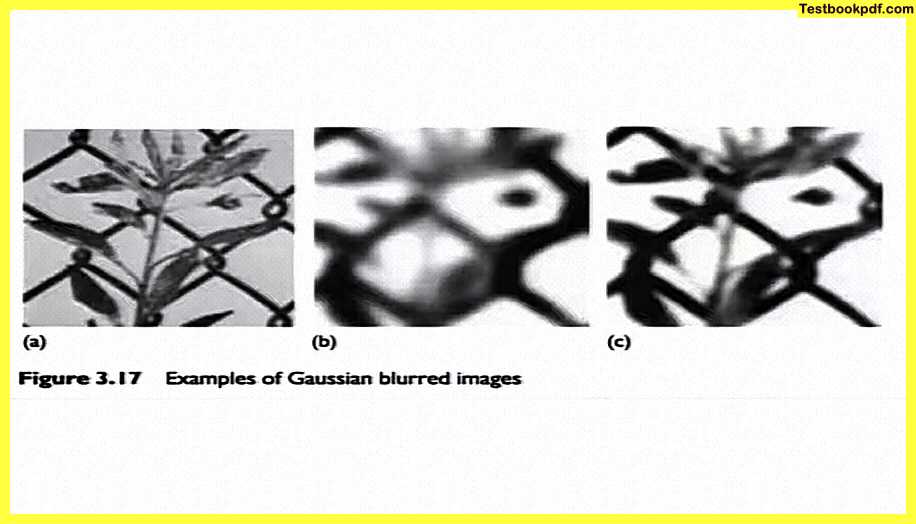

In other words, the algorithm works on the intensity values it gets from the gray-level description, averaging them over circular regions. The values at the center of each circle are weighted more heavily than those at its edges, in a way identical to a normal distribution. If you picture the bell-shaped curve of a normal distribution, the highest weight is at the center, and the weights fall off toward the outside. By changing the size of the circle over which intensity values are averaged, it is possible to produce a range of images blurred to different degrees. Let us look at an example.
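Gaussian blurring over circular regions can be sketched in plain Python. Weights fall off with distance from the center following a two-dimensional normal curve, and `sigma` sets the size of the region, so larger values give blurrier images. Two details are choices made for this sketch, not taken from Marr and Hildreth: the truncation radius of about 3 * sigma, and the border handling, which copies the nearest edge pixel.

```python
import math
from typing import Dict, List, Tuple

Image = List[List[float]]

def circular_gaussian_weights(sigma: float) -> Dict[Tuple[int, int], float]:
    """Weights over a circular neighborhood of radius about 3*sigma.
    They follow a 2D normal curve (largest at the center) and sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    weights = {}
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            d2 = dx * dx + dy * dy
            if d2 <= radius * radius:  # keep only offsets inside the circle
                weights[(dx, dy)] = math.exp(-d2 / (2 * sigma * sigma))
    total = sum(weights.values())
    return {offset: w / total for offset, w in weights.items()}

def gaussian_blur(image: Image, sigma: float) -> Image:
    """Replace each intensity by the weighted average of its circular neighborhood.
    Positions beyond the border reuse the nearest border pixel."""
    height, width = len(image), len(image[0])
    weights = circular_gaussian_weights(sigma)
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            total = 0.0
            for (dx, dy), w in weights.items():
                yy = min(max(y + dy, 0), height - 1)
                xx = min(max(x + dx, 0), width - 1)
                total += w * image[yy][xx]
            row.append(total)
        blurred.append(row)
    return blurred

image = [[0.0] * 15 for _ in range(15)]
image[7][7] = 1.0  # a single bright point
peaks = [gaussian_blur(image, s)[7][7] for s in (0.5, 1.0, 2.0)]
```

As `sigma` grows, the bright point is spread over a wider circle, so the value left at its original position falls. The total amount of light is preserved because the weights sum to 1.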

Gaussian Blurred Images

Here, figure A is the original picture, and figures B and C are blurred to different degrees. Marr and Hildreth's algorithm works by comparing images that have been blurred to different degrees. Suppose an intensity change is visible at two or more adjacent levels of blurring, for example in images blurred by 10, 20 and 30 percent. Then it is assumed that the change cannot correspond to a random fluctuation and must relate to an edge of an object.
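The comparison across blur levels can be sketched on a single row of intensities. At each blur level, a change counts as visible where the difference between neighboring samples peaks above a threshold. In one dimension, this is where the second derivative of the blurred row crosses zero, which is what Marr and Hildreth's operator looks for. A change is accepted as an edge only if it appears, within one sample, at two adjacent blur levels. The threshold, the tolerance and the `sigma` values are illustrative assumptions. The published algorithm works on two-dimensional images.

```python
import math
from typing import List

def gaussian_kernel(sigma: float) -> List[float]:
    """1D normal-curve weights, truncated at about 3*sigma and normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur(signal: List[float], sigma: float) -> List[float]:
    """Weighted average around each sample; positions past the ends reuse the end samples."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    n = len(signal)
    return [
        sum(w * signal[min(max(i + k - radius, 0), n - 1)] for k, w in enumerate(kernel))
        for i in range(n)
    ]

def edges_at_scale(blurred: List[float], threshold: float) -> List[int]:
    """Positions i where the change blurred[i] -> blurred[i+1] is a local peak above threshold."""
    grad = [b - a for a, b in zip(blurred, blurred[1:])]
    edges = []
    for i, g in enumerate(grad):
        prev = abs(grad[i - 1]) if i > 0 else 0.0
        nxt = abs(grad[i + 1]) if i + 1 < len(grad) else 0.0
        if abs(g) >= threshold and abs(g) >= prev and abs(g) > nxt:
            edges.append(i)
    return edges

def confirmed_edges(signal: List[float], sigmas: List[float], threshold: float, tolerance: int = 1) -> List[int]:
    """Keep changes that appear at two adjacent blur levels (within `tolerance` samples)."""
    per_scale = [edges_at_scale(blur(signal, s), threshold) for s in sigmas]
    confirmed = set()
    for fine, coarse in zip(per_scale, per_scale[1:]):
        for p in fine:
            if any(abs(p - q) <= tolerance for q in coarse):
                confirmed.add(p)
    return sorted(confirmed)

signal = [0.0] * 20 + [1.0] * 20  # a step edge between samples 19 and 20
signal[8] = 1.0                   # a one-sample spike standing in for random fluctuation
edges = confirmed_edges(signal, [1.0, 2.0, 3.0], threshold=0.1)
```

At the finest blur level, the spike produces two apparent changes, at positions 6 and 9. Both vanish at the next level, so they are discarded. The step at position 19 survives every level and is kept as an edge.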

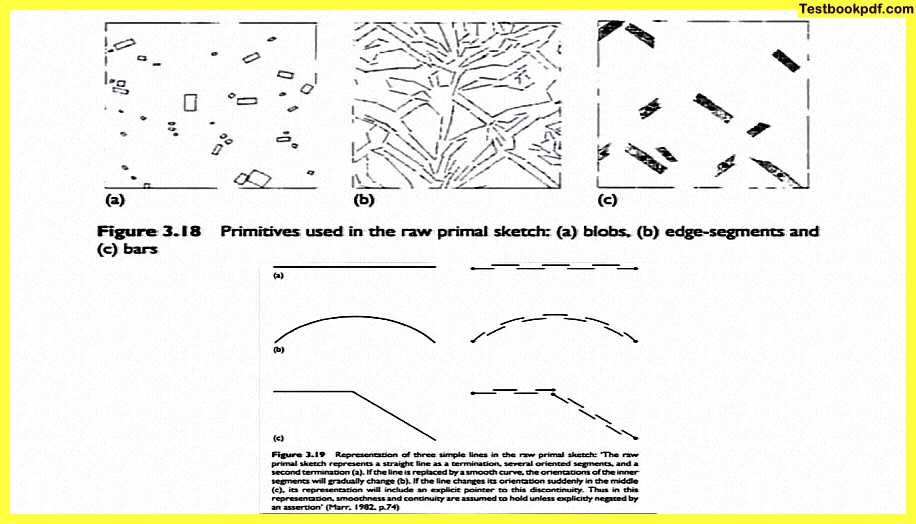

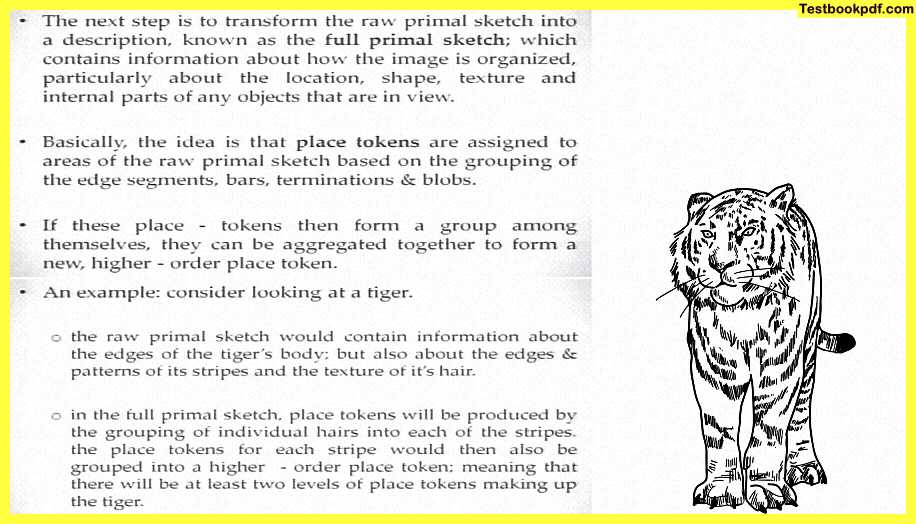

So far, you have data about intensity and data about how the pattern of intensity changes. You can see how these computations cumulatively tell us important information about what is out there and what the object or scene looks like. Although this algorithm was implemented on a computer, there is also evidence that retinal processing delivers descriptions that have been blurred to different degrees. This could be evidence in support of the process Marr proposed. By analyzing the changes in intensity values in the blurred images, it is possible to form a symbolic representation consisting of four primitives, corresponding to four types of intensity change:

Edge segments represent a sudden change in intensity. A bar represents two parallel edge segments, that is, two sudden changes in intensity. A termination represents a sudden discontinuity, for example at the edge of this table. A blob corresponds to a small enclosed area bounded by changes in intensity. A face, for instance, has many internal contours, whereas a picture of an orange has few and looks like a blob.

Similarly, the flower in the figure looks more like a blob, while the steel wires behind it look more like edges. In the figures, picture A has a lot of blobs, picture B has a lot of edge segments, and picture C, the wires, has a lot of bars. These primitives can describe both straight and curved lines. Once the raw primal sketch has been formed in this way, the next step is to transform it into a description known as the full primal sketch.
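Two of the four primitives can be illustrated on a one-dimensional intensity profile. Sudden changes become edge segments, and two opposite changes close together become a bar, that is, a narrow light or dark stripe. Terminations and blobs need two-dimensional information and are omitted. The threshold and the maximum bar width are hypothetical parameters chosen for this sketch.

```python
from typing import List, Tuple

def find_edges(profile: List[float], threshold: float) -> List[Tuple[int, int]]:
    """Return (position, polarity) for each sudden change between neighboring samples."""
    edges = []
    for i in range(len(profile) - 1):
        diff = profile[i + 1] - profile[i]
        if abs(diff) >= threshold:
            edges.append((i, 1 if diff > 0 else -1))
    return edges

def group_primitives(edges: List[Tuple[int, int]], max_bar_width: int) -> List[Tuple[str, int, int]]:
    """Pair opposite-polarity edges that lie close together into bars; others stay edge segments."""
    primitives = []
    i = 0
    while i < len(edges):
        pos, sign = edges[i]
        if i + 1 < len(edges):
            next_pos, next_sign = edges[i + 1]
            if next_sign == -sign and next_pos - pos <= max_bar_width:
                primitives.append(("bar", pos, next_pos))  # two parallel edges = one bar
                i += 2
                continue
        primitives.append(("edge", pos, pos))
        i += 1
    return primitives

profile = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
primitives = group_primitives(find_edges(profile, 0.5), max_bar_width=3)
```

The narrow bright stripe near the start is reported as one bar, and the single step later on as an edge segment.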

To recap: we formed a gray-level description, and then a raw primal sketch by analyzing the patterns of change in that intensity. Now we move toward the full primal sketch. The full primal sketch contains information about how the image is organized, particularly about the location, shape, texture and internal parts of any objects in view. The idea is that place tokens are assigned to areas of the raw primal sketch based on the grouping of edge segments, bars, terminations and blobs.

Once you have done the raw analysis, as in the figure, you group things together; that is what forming the full primal sketch involves. If place tokens in turn form a group among themselves, they can be aggregated to form a new, higher-order place token.

So place tokens can appear at several different levels. We can understand this better with an example. Say you are looking at a tiger. The raw primal sketch of the tiger would contain information about the edges of the tiger's body, that is, its contours, and also about the edges of its stripes. The tiger is covered in stripes, but at a higher level there is also the overall contour of the tiger.

In the full primal sketch, place tokens will be produced by grouping individual hairs into each of the stripes, giving information about the stripes. The place tokens for the stripes would then be grouped into a higher-order place token. There will therefore be at least two levels of place tokens making up the tiger: one level making up the stripes, and another, built from the stripes, making up the entire contour of the tiger. Various mechanisms have been postulated for grouping the raw primal sketch components into place tokens, and then grouping place tokens together to form the full primal sketch. One is clustering, in which tokens that are close to one another are grouped. This is very similar to the Gestalt principle of proximity, under which things that are close together are seen as belonging to one object. Another is curvilinear aggregation, in which tokens that have similar alignments are grouped together.
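Grouping by proximity into place tokens, and then grouping those tokens again, can be sketched as follows. Tokens closer than `max_gap` are chained into one group, using a simple single-linkage rule assumed here. Each group is then replaced by one higher-order place token at its centroid. The tiger-stripe coordinates are made up, and curvilinear aggregation is not modeled.

```python
import math
from typing import List, Tuple

Token = Tuple[float, float]

def cluster_by_proximity(tokens: List[Token], max_gap: float) -> List[List[Token]]:
    """Chain tokens into groups: a token joins a group if it is within max_gap of any member."""
    groups = []
    unassigned = list(tokens)
    while unassigned:
        group = [unassigned.pop(0)]
        changed = True
        while changed:
            changed = False
            for t in list(unassigned):
                if any(math.dist(t, g) <= max_gap for g in group):
                    group.append(t)
                    unassigned.remove(t)
                    changed = True
        groups.append(group)
    return groups

def higher_order_tokens(groups: List[List[Token]]) -> List[Token]:
    """Replace each group by a single place token at the group's centroid."""
    return [(sum(x for x, _ in g) / len(g), sum(y for _, y in g) / len(g)) for g in groups]

# Made-up "hairs": three vertical stripes of three tokens each.
hairs = [(0, 0), (0, 1), (0, 2), (4, 0), (4, 1), (4, 2), (8, 0), (8, 1), (8, 2)]
stripes = higher_order_tokens(cluster_by_proximity(hairs, max_gap=1.5))  # first level
tiger = higher_order_tokens(cluster_by_proximity(stripes, max_gap=5.0))  # second level
```

The same grouping rule, applied twice with a larger gap the second time, yields the two levels described for the tiger: three stripe tokens, then one token for the whole animal.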

Curvilinear aggregation explains why, for example, when you see a line you are more likely to see it as continuing than as breaking at different places. We will discuss these Gestalt principles in more detail in later lectures.

The third stage is the generation of the 2.5D sketch. Marr's modular approach means that while the full primal sketch is being produced, other kinds of visual information are being analyzed simultaneously. Examples include depth relations, the distance between a surface and the observer, and whether the object is moving or you are moving. Marr proposed that the information from all such modules (distance, shape, color, motion and so on) is combined to form what is called the 2.5D (two-and-a-half-dimensional) sketch. It is called 2.5D because the position and depth of surfaces and objects are specified in relation to the observer, rather than as a full three-dimensional description. Because this is the view of the object in relation to the observer, it is also called a viewer-centered representation. It is the object as it looks to me, that is, as its image falls on my retina. It will not contain any information about the object that is not present in the retinal image.
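One way to picture the 2.5D sketch as a data structure is a table keyed by retinal location. Each entry collects what separate modules report about that location relative to the observer. The module names and fields below are hypothetical. A location appears only if some module reported on it, which mirrors the point that the sketch holds nothing not present in the retinal image.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Location = Tuple[int, int]  # position in the retinal image (row, column)

@dataclass
class SurfacePatch:
    depth: Optional[float] = None   # distance from the observer (viewer-centered)
    moving: Optional[bool] = None   # as reported by a hypothetical motion module
    color: Optional[str] = None     # as reported by a hypothetical color module

def build_2_5d_sketch(depth_module: Dict[Location, float],
                      motion_module: Dict[Location, bool],
                      color_module: Dict[Location, str]) -> Dict[Location, SurfacePatch]:
    """Merge independently computed module outputs into one viewer-centered table."""
    sketch: Dict[Location, SurfacePatch] = {}
    for loc, depth in depth_module.items():
        sketch.setdefault(loc, SurfacePatch()).depth = depth
    for loc, moving in motion_module.items():
        sketch.setdefault(loc, SurfacePatch()).moving = moving
    for loc, color in color_module.items():
        sketch.setdefault(loc, SurfacePatch()).color = color
    return sketch

sketch = build_2_5d_sketch({(0, 0): 2.5, (0, 1): 2.6}, {(0, 1): True}, {(0, 0): "red"})
```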

The viewer-centered representation is later turned into a fully 3D object-centered representation, which we will discuss in a later lecture. Marr saw the 2.5D sketch as consisting of a series of primitives containing vectors that show the orientation of each surface. Once you have these surfaces, you can combine them, and the 2.5D sketch appears as a series of primitives containing these vectors. You can understand this by looking at the 2.5D sketch of a cube.
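The orientation vectors for the cube can be sketched with a little geometry. For an axis-aligned cube, each face's outward normal points along one axis. A face is in view when its normal points toward the observer. Its slant, the angle between the normal and the line of sight, is a viewer-centered description of how far the surface is turned away. The cube position and viewpoint are made-up values.

```python
import math
from typing import List, Tuple

Vector = Tuple[float, float, float]

def visible_faces(cube_min: Vector, size: float, viewer: Vector) -> List[Tuple[Vector, float]]:
    """Return (outward unit normal, slant in degrees) for each cube face turned toward the viewer.
    Slant is the angle between the face normal and the line of sight to the face center."""
    half = size / 2
    center = [c + half for c in cube_min]
    faces = []
    for axis in range(3):
        for sign in (1.0, -1.0):
            normal = [0.0, 0.0, 0.0]
            normal[axis] = sign
            face_center = [center[i] + half * normal[i] for i in range(3)]
            to_viewer = [viewer[i] - face_center[i] for i in range(3)]
            facing = sum(n * v for n, v in zip(normal, to_viewer))
            if facing > 0:  # the face points toward the observer, so it is in view
                slant = math.degrees(math.acos(facing / math.hypot(*to_viewer)))
                faces.append((tuple(normal), slant))
    return faces

faces = visible_faces((0.0, 0.0, 0.0), 1.0, (3.0, 3.0, 3.0))
```

Seen from near a corner, exactly three faces are visible. Their normals point in three different directions, like the three vectors in the cube example.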

The vectors pointing in three directions tell us the orientation of each surface.

Evaluating Marr's Theory

Let us look back and evaluate what Marr proposed. A lot of research has followed Marr's theory. Some of it confirms his proposed mechanisms, while some has found shortcomings. Marr and Hildreth's proposal that the primal sketch is formed by looking for changes in intensity worked very well in computer simulations. However, it could not be guaranteed that the human visual system follows the same process. As we discussed in an earlier lecture, the same outcome can have more than one possible explanation. Enns and Rensink (1990) showed that participants could also use three-dimensional information, rather than only the two-dimensional information needed to form a full primal sketch. In some sense, then, Marr underestimated the efficiency of our perceptual systems. On the other hand, Marr's proposal that depth cues are integrated in the 2.5D sketch was supported by experiments by Young and colleagues (1993). They reported that the perceptual system processes depth cues separately and makes selective use of them depending on how noisy they are. The perceptual system uses these cues, these invariant features, to develop a rich representation of the external world and the objects in it.
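A common way to model the selective use of cues according to their noise is reliability-weighted averaging, in which each cue's estimate is weighted by the inverse of its variance. This model is swapped in as an illustration. The lecture does not specify the computation, and the cue values below are invented.

```python
from typing import List, Tuple

def combine_depth_cues(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Combine (depth, variance) pairs from separate cues by inverse-variance weighting.
    Returns the combined depth and the variance of the combined estimate."""
    weights = [1.0 / variance for _, variance in estimates]  # noisier cue -> smaller weight
    total = sum(weights)
    depth = sum(w * d for w, (d, _) in zip(weights, estimates)) / total
    return depth, 1.0 / total

# Invented values: a texture cue (reliable) and a motion cue (noisier).
depth, variance = combine_depth_cues([(2.0, 0.25), (3.0, 1.0)])
```

The combined depth lies closer to the more reliable cue, and the combined variance is smaller than that of either cue alone. Making the motion cue noisier pulls the result further toward the texture cue.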

At the End

That was David Marr's theory of perception; let us sum it up. We have discussed Marr's 2.5D sketch approach to perception and seen that information from sensory experience can be systematically analyzed to construct a good perceptual representation of the world. By good I mean a rich representation that has all the knowledge needed to construct a visual representation of the world. Because Marr was not really concerned with perception for action, we have not discussed that here. There were, however, shortcomings and gaps in matching this kind of computational approach to human performance, as later research on Marr's principles has shown. Nonetheless, it was a well-rounded computational approach to visual perception. In the next lectures we will look at other approaches to how visual perception is achieved. Thank you.
